Kunsan National University - South Korea

Assistant Professor, Computer Science Department

As an Assistant Professor at Kunsan National University, I instruct undergraduate courses in interactive computer graphics and game engine programming.

In addition to teaching, I conduct research on ways to integrate XR technologies into knowledge work in a desktop setting.

My duties also include mentoring students on career development and academic progress.

Additionally, I advise students who develop gesture-based installations for department promotions.

University of Texas - Austin, TX

Postdoctoral Researcher, Texas Advanced Computing Center (TACC)

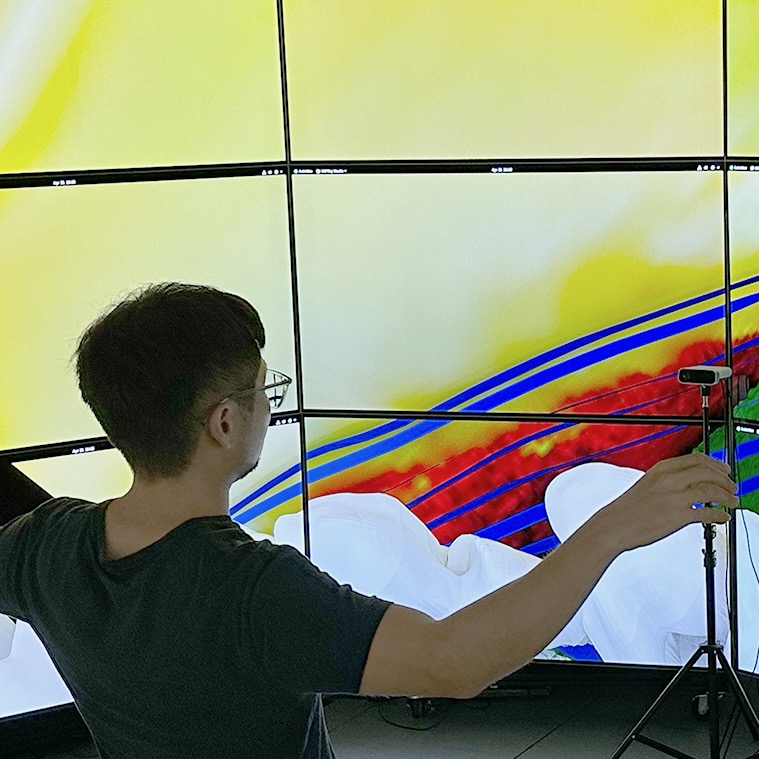

At the visitor center, high-resolution tiled displays show images and videos from visualization projects that researchers at the center have worked on.

My task was to bring interactive 3D content to these systems, giving visitors engaging ways to explore ongoing work.

Working with software engineers at Intel and research scientists at TACC, I upgraded Intel's raytracing application to display a single, coherent 3D virtual environment on the tiled displays and added support for gesture-based interaction.

We created a proof-of-concept prototype that enables a user to move around a 3D virtual environment by lifting both hands - pretending to be a bird - and leaning the body to fly in that direction.

Collaborative Results

Gwangju Institute of Science and Technology - South Korea

Research Engineer, Korea Culture and Technology Institute (KCTI)

During my stay in South Korea, I worked in a lab that works closely with museums to support new generations of public exhibitions.

My task was to develop interactive installations for museums by closely working with experts from other fields, e.g., graphic designers, data curators, and historians. I developed visualization and interaction techniques for use by museum visitors to explore museums' archived data using gesture-based interaction.

To ease the process of integrating assets created by designers and data curators, I implemented features to load the assets and populate 3D scenes and GUIs.

By refactoring the code, I also provided a codebase for gesture-based interaction in Unity, which enabled another developer to create separate interactive applications. During my stay, I helped create two interactive installations presented at public venues (each lasted about a week).

Collaborative Results

- "The Road of Hyecho" - Interactive installation at Gwangju Cultural Foundation (news)

- "The Road of Ramayana" - Interactive installation at Asia Culture Center (video, news, news)

- "Effects of Age and Motivation for Visiting on AR Museum Experiences" (10.1145/3359996.3364711)

University of Minnesota - Twin Cities, Minneapolis, MN

Research Assistant, Interactive Visualization Lab (IVLab)

During my Ph.D., I worked with experts from other fields, e.g., geology, medical devices, neuroscience, and health.

My task was to create 3D interactive systems to assist these experts with analyzing and presenting their data.

I mainly worked on creating data-driven 3D virtual environments and integrating VR/AR technologies so that these experts could immerse themselves in their data to look for findings and confirm hypotheses.

Also, focusing on public-facing content, I worked on creating VR solutions to make these technologies accessible to the public for training and education.

This work was presented at the IEEE VR and IEEE VIS conferences.

Collaborative Results

-

"Worlds-in-Wedges: Combining WIMs and Portals to Support Comparative Immersive Visualization of Forestry Data"

(10.1109/VR.2019.8797871)

-

"Linked View Visualization Using Clipboard-Style Mobile VR: Application to Communicating Forestry Data"

-

"Hybrid Data Constructs: Interacting with Biomedical Data in Augmented Spaces"

(10.5040/9781350133266.ch-011)

-

"Spatial Correlation: An Interactive Display of Virtual Gesture Sculpture"

(10.1162/LEON_a_01226)

-

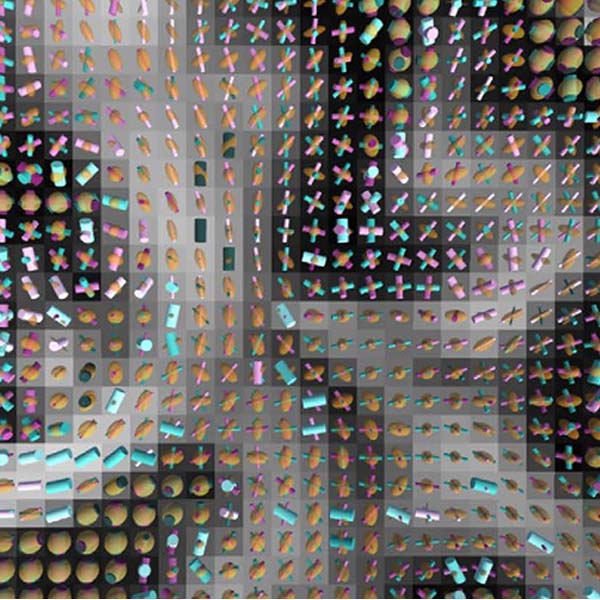

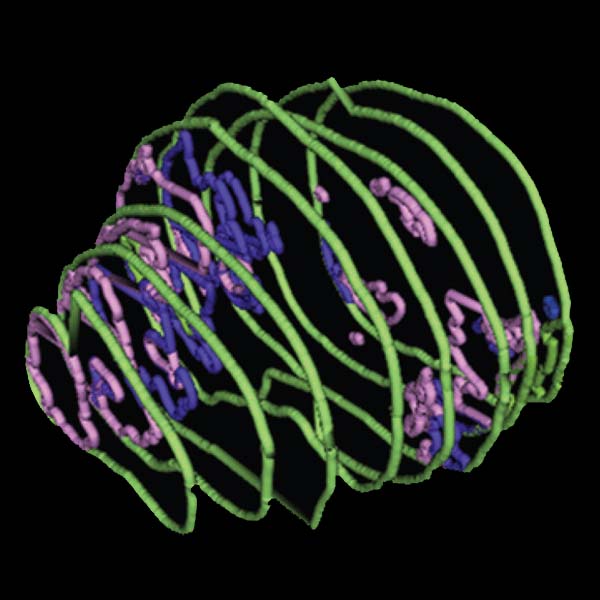

"Microstructure Imaging of Crossing (MIX) White Matter Fibers from diffusion MRI"

(10.1038/srep38927)

INRIA - Saclay, France

Research Intern, Analysis and Visualization Lab (AVIZ)

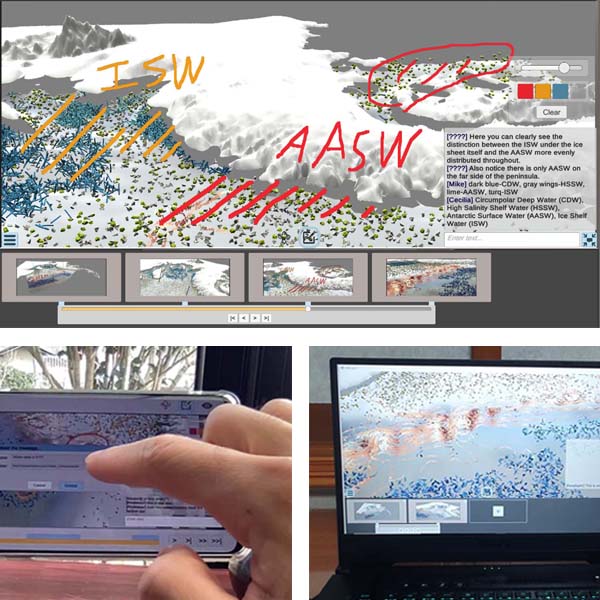

Scientific visualization applications are often complex, requiring substantial expertise in how to use software features.

My task was to find ways of facilitating team-science collaboration even when members have different levels of expertise.

Our approach is to provide different ways of viewing/interacting with data in one collaborative framework.

Data can simply be viewed from a video file created using animation features we developed.

More engaged users can load the same video file in a visualization application and thoroughly explore the depicted data.

Traveling users can see the video file in our custom video player and sketch or leave comments on data views.

With this framework, users can choose a client based on their needs and situation. Importantly, changes made in any of these clients are propagated back to the original data, so everyone stays in sync.

This work was published in TVCG.

Collaborative Results

University of Minnesota - Twin Cities, Minneapolis, MN

Programmer, Center for Magnetic Resonance Research (CMRR)

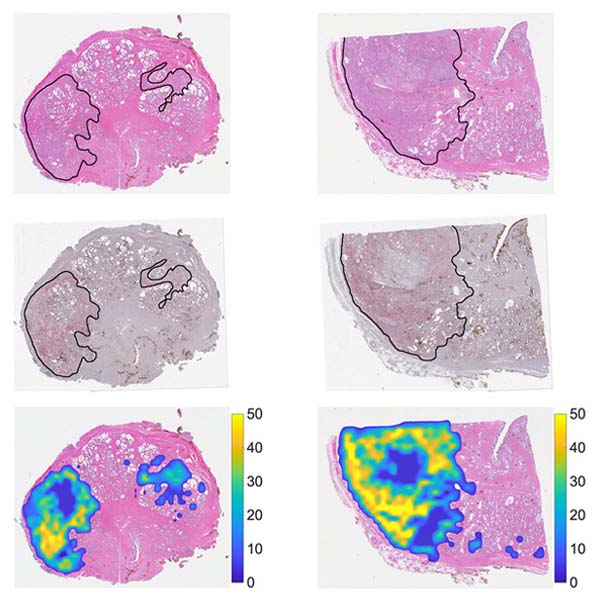

When a patient is diagnosed with prostate cancer, the prostate may be surgically removed.

To study the cancer, pathologists cut the excised prostate into slices and then into subsections so that the tissue can be scanned.

My task was to develop a series of tools to assist pathologists with reconstructing a prostate volume and making further annotations.

I helped create a Photoshop-like application that enabled pathologists to stitch the scanned images back together and draw cancer boundaries.

I also implemented output features to save these annotated slice images to a file format for further data analysis.

Researchers in the lab used the annotated data to build models for detecting prostate cancer; their work was published in Scientific Reports and Radiology.

Collaborative Results

- "Signature Maps for Automatic Identification of Prostate Cancer from Colorimetric Analysis of H&E-and IHC-stained Histopathological Specimen" (10.1038/s41598-019-43486-y)

- "Detection of Prostate Cancer: Quantitative Multiparametric MR Imaging Models Developed Using Registered Correlative Histopathology" (10.1148/radiol.2015151089)